mirror of

https://github.com/mgerb/mywebsite

synced 2026-03-05 15:55:25 +00:00

updated bunch of file paths and changed the way posts are loaded

1301

node_modules/mongodb/HISTORY.md

generated

vendored

Normal file

File diff suppressed because it is too large

201

node_modules/mongodb/LICENSE

generated

vendored

Normal file

@@ -0,0 +1,201 @@

|

||||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||

unless required by applicable law (such as deliberate and grossly

|

||||

negligent acts) or agreed to in writing, shall any Contributor be

|

||||

liable to You for damages, including any direct, indirect, special,

|

||||

incidental, or consequential damages of any character arising as a

|

||||

result of this License or out of the use or inability to use the

|

||||

Work (including but not limited to damages for loss of goodwill,

|

||||

work stoppage, computer failure or malfunction, or any and all

|

||||

other commercial damages or losses), even if such Contributor

|

||||

has been advised of the possibility of such damages.

|

||||

|

||||

9. Accepting Warranty or Additional Liability. While redistributing

|

||||

the Work or Derivative Works thereof, You may choose to offer,

|

||||

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||

or other liability obligations and/or rights consistent with this

|

||||

License. However, in accepting such obligations, You may act only

|

||||

on Your own behalf and on Your sole responsibility, not on behalf

|

||||

of any other Contributor, and only if You agree to indemnify,

|

||||

defend, and hold each Contributor harmless for any liability

|

||||

incurred by, or claims asserted against, such Contributor by reason

|

||||

of your accepting any such warranty or additional liability.

|

||||

|

||||

END OF TERMS AND CONDITIONS

|

||||

|

||||

APPENDIX: How to apply the Apache License to your work.

|

||||

|

||||

To apply the Apache License to your work, attach the following

|

||||

boilerplate notice, with the fields enclosed by brackets "{}"

|

||||

replaced with your own identifying information. (Don't include

|

||||

the brackets!) The text should be enclosed in the appropriate

|

||||

comment syntax for the file format. We also recommend that a

|

||||

file or class name and description of purpose be included on the

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright {yyyy} {name of copyright owner}

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

11

node_modules/mongodb/Makefile

generated

vendored

Normal file

@@ -0,0 +1,11 @@

|

||||

NODE = node

|

||||

NPM = npm

|

||||

JSDOC = jsdoc

|

||||

name = all

|

||||

|

||||

generate_docs:

|

||||

# cp -R ./HISTORY.md ./docs/content/meta/release-notes.md

|

||||

hugo -s docs/reference -d ../../public

|

||||

$(JSDOC) -c conf.json -t docs/jsdoc-template/ -d ./public/api

|

||||

cp -R ./public/api/scripts ./public/.

|

||||

cp -R ./public/api/styles ./public/.

|

||||

415

node_modules/mongodb/README.md

generated

vendored

Normal file

@@ -0,0 +1,415 @@

|

||||

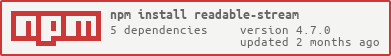

[](https://nodei.co/npm/mongodb/) [](https://nodei.co/npm/mongodb/)

|

||||

|

||||

[](http://travis-ci.org/mongodb/node-mongodb-native)

|

||||

|

||||

[](https://gitter.im/mongodb/node-mongodb-native?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge)

|

||||

|

||||

# Description

|

||||

|

||||

The MongoDB driver is the high-level part of the 2.1 or higher MongoDB driver and is meant for end users.

|

||||

|

||||

## MongoDB Node.JS Driver

|

||||

|

||||

| what | where |

|

||||

|---------------|------------------------------------------------|

|

||||

| documentation | http://mongodb.github.io/node-mongodb-native/ |

|

||||

| api-doc | http://mongodb.github.io/node-mongodb-native/2.1/api/ |

|

||||

| source | https://github.com/mongodb/node-mongodb-native |

|

||||

| mongodb | http://www.mongodb.org/ |

|

||||

|

||||

### Blogs of Engineers involved in the driver

|

||||

- Christian Kvalheim [@christkv](https://twitter.com/christkv) <http://christiankvalheim.com>

|

||||

|

||||

### Bugs / Feature Requests

|

||||

|

||||

Think you’ve found a bug? Want to see a new feature in node-mongodb-native? Please open a

|

||||

case in our issue management tool, JIRA:

|

||||

|

||||

- Create an account and log in at <https://jira.mongodb.org>.

|

||||

- Navigate to the NODE project <https://jira.mongodb.org/browse/NODE>.

|

||||

- Click **Create Issue** - Please provide as much information as possible about the issue type and how to reproduce it.

|

||||

|

||||

Bug reports in JIRA for all driver projects (i.e. NODE, PYTHON, CSHARP, JAVA) and the

|

||||

Core Server (i.e. SERVER) project are **public**.

|

||||

|

||||

### Questions and Bug Reports

|

||||

|

||||

* mailing list: https://groups.google.com/forum/#!forum/node-mongodb-native

|

||||

* jira: http://jira.mongodb.org/

|

||||

|

||||

### Change Log

|

||||

|

||||

http://jira.mongodb.org/browse/NODE

|

||||

|

||||

# Installation

|

||||

|

||||

The recommended way to get started with the Node.js 2.0 driver is to use `NPM` (Node Package Manager) to install the dependency in your project.

|

||||

|

||||

## MongoDB Driver

|

||||

|

||||

Given that you have created your own project using `npm init`, we install the mongodb driver and its dependencies by executing the following `NPM` command.

|

||||

|

||||

```

|

||||

npm install mongodb --save

|

||||

```

|

||||

|

||||

This will download the MongoDB driver and add a dependency entry in your `package.json` file.

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

The MongoDB driver depends on several other packages. These are:

|

||||

|

||||

* mongodb-core

|

||||

* bson

|

||||

* kerberos

|

||||

* node-gyp

|

||||

|

||||

The `kerberos` package is a C++ extension that requires a build environment to be installed on your system. You must be able to build node.js itself to be able to compile and install the `kerberos` module. Furthermore, the `kerberos` module requires the MIT Kerberos package to compile correctly on UNIX operating systems. Consult your UNIX operating system's package manager to find out which libraries to install.

|

||||

|

||||

{{% note class="important" %}}

|

||||

Windows already contains the SSPI API used for Kerberos authentication. However, you will need to install a full compiler tool chain using Visual Studio C++ to correctly install the kerberos extension.

|

||||

{{% /note %}}

|

||||

|

||||

### Diagnosing on UNIX

|

||||

|

||||

If you don’t have the build essentials, it won’t build. On Linux you will need gcc and g++, node.js with all the headers, and python. The easiest way to figure out what’s missing is by trying to build the kerberos project. You can do this by performing the following steps.

|

||||

|

||||

```

|

||||

git clone https://github.com/christkv/kerberos.git

|

||||

cd kerberos

|

||||

npm install

|

||||

```

|

||||

|

||||

If all the steps complete, you have the right toolchain installed. If you get an error saying node-gyp was not found, install it globally by running the following command.

|

||||

|

||||

```

|

||||

npm install -g node-gyp

|

||||

```

|

||||

|

||||

If it compiles correctly and runs the tests, you are golden. We can now try to install the mongodb driver by running the following command.

|

||||

|

||||

```

|

||||

cd yourproject

|

||||

npm install mongodb --save

|

||||

```

|

||||

|

||||

If it still fails the next step is to examine the npm log. Rerun the command but in this case in verbose mode.

|

||||

|

||||

```

|

||||

npm --loglevel verbose install mongodb

|

||||

```

|

||||

|

||||

This will print out all the steps npm is performing while trying to install the module.
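Before cloning and building anything, it can help to check which of the tools mentioned above (gcc, g++, python, node-gyp) are reachable from the shell. The following is a minimal sketch, not part of the driver: `isOnPath` and `missingTools` are hypothetical helper names, and the check assumes each tool exits successfully when called with `--version`.

```js
var spawnSync = require('child_process').spawnSync;

// Returns true when running `<tool> --version` succeeds.
// Assumption: every tool checked here accepts a --version flag.
function isOnPath(tool) {
  var res = spawnSync(tool, ['--version'], { stdio: 'ignore' });
  return !res.error && res.status === 0;
}

// Returns the subset of `tools` for which `check` reports false.
function missingTools(tools, check) {
  return tools.filter(function (tool) { return !check(tool); });
}

var missing = missingTools(['gcc', 'g++', 'python', 'node-gyp'], isOnPath);
console.log(missing.length ? 'Missing: ' + missing.join(', ') : 'All tools found');
```

Passing the check function as a parameter keeps `missingTools` independent of the machine it runs on, which makes it easy to try with a different list of tools.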

|

||||

|

||||

### Diagnosing on Windows

|

||||

|

||||

A compiler tool chain known to work for compiling `kerberos` on Windows is the following.

|

||||

|

||||

* Visual Studio C++ 2010 (do not use higher versions)

|

||||

* Windows 7 64bit SDK

|

||||

* Python 2.7 or higher

|

||||

|

||||

Open the Visual Studio command prompt. Ensure node.exe is in your path and install node-gyp.

|

||||

|

||||

```

|

||||

npm install -g node-gyp

|

||||

```

|

||||

|

||||

Next you will have to build the project manually to test it. Use any tool you use with git and grab the repo.

|

||||

|

||||

```

|

||||

git clone https://github.com/christkv/kerberos.git

|

||||

cd kerberos

|

||||

npm install

|

||||

node-gyp rebuild

|

||||

```

|

||||

|

||||

This should rebuild the driver successfully if you have everything set up correctly.

|

||||

|

||||

### Other possible issues

|

||||

|

||||

Your python installation might be hosed, making gyp break. I always recommend that you test your deployment environment first by trying to build node itself on the server in question, as this should unearth any issues with broken packages (and there are a lot of broken packages out there).

|

||||

|

||||

Another thing is to ensure your user has write permission to wherever the node modules are being installed.
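The write-permission check can be done from Node itself. This is a small sketch using `fs.accessSync` from the standard library; `canWrite` is a hypothetical helper name, and the directory passed in (the current working directory here) is only an assumption about where your modules get installed.

```js
var fs = require('fs');

// Returns true when the current user may write to `dir`,
// false when the directory is missing or not writable.
function canWrite(dir) {
  try {
    fs.accessSync(dir, fs.constants.W_OK);
    return true;
  } catch (err) {
    return false;
  }
}

// Assumption: modules are installed under the current project directory.
console.log(canWrite(process.cwd()));
```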

|

||||

|

||||

QuickStart

|

||||

==========

|

||||

The quick start guide will show you how to set up a simple application using node.js and MongoDB. Its scope is only how to set up the driver and perform the simple CRUD operations. For more in-depth coverage we encourage reading the tutorials.

|

||||

|

||||

Create the package.json file

|

||||

----------------------------

|

||||

Let's create a directory where our application will live. In our case we will put this under our projects directory.

|

||||

|

||||

```

|

||||

mkdir myproject

|

||||

cd myproject

|

||||

```

|

||||

|

||||

Enter the following command and answer the questions to create the initial structure for your new project.

|

||||

|

||||

```

|

||||

npm init

|

||||

```

|

||||

|

||||

Next we need to edit the generated package.json file to add the dependency for the MongoDB driver. The package.json file below is just an example and yours will look different depending on how you answered the questions after entering `npm init`.

|

||||

|

||||

```
{
  "name": "myproject",
  "version": "1.0.0",
  "description": "My first project",
  "main": "index.js",
  "repository": {
    "type": "git",
    "url": "git://github.com/christkv/myfirstproject.git"
  },
  "dependencies": {
    "mongodb": "~2.0"
  },
  "author": "Christian Kvalheim",
  "license": "Apache 2.0",
  "bugs": {
    "url": "https://github.com/christkv/myfirstproject/issues"
  },
  "homepage": "https://github.com/christkv/myfirstproject"
}
```
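The `"mongodb": "~2.0"` entry is a tilde range: it accepts any 2.0.x release but not 2.1.0 or later. The sketch below illustrates that rule for ranges of the form `~MAJOR.MINOR` only. `satisfiesTilde` is a hypothetical helper written for this illustration and is not how npm implements range matching.

```js
// Simplified tilde-range check for ranges of the form "~MAJOR.MINOR".
// Not npm's real implementation: no prerelease tags, no other operators.
function satisfiesTilde(version, range) {
  var want = range.replace(/^~/, '').split('.').map(Number);
  var have = version.split('.').map(Number);
  // Major and minor must match; any patch level is accepted.
  return have[0] === want[0] && have[1] === want[1];
}

console.log(satisfiesTilde('2.0.55', '~2.0')); // true
console.log(satisfiesTilde('2.1.0', '~2.0'));  // false
```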

|

||||

|

||||

Save the file and return to the shell or command prompt and use **NPM** to install all the dependencies.

|

||||

|

||||

```

|

||||

npm install

|

||||

```

|

||||

|

||||

You should see **NPM** download a lot of files. Once it's done you'll find all the downloaded packages under the **node_modules** directory.

|

||||

|

||||

Booting up a MongoDB Server

|

||||

---------------------------

|

||||

Let's boot up a MongoDB server instance. Download the right MongoDB version from [MongoDB](http://www.mongodb.org), open a new shell or command line and ensure the **mongod** command is in the shell or command line path. Now let's create a database directory (in our case under **/data**) and start **mongod** pointing at it.

|

||||

|

||||

```

|

||||

mongod --dbpath=/data --port 27017

|

||||

```

|

||||

|

||||

You should see the **mongod** process start up and print some status information.

|

||||

|

||||

Connecting to MongoDB

|

||||

---------------------

|

||||

Let's create a new **app.js** file that we will use to show the basic CRUD operations using the MongoDB driver.

|

||||

|

||||

First let's add code to connect to the server and the database **myproject**.

|

||||

|

||||

```js
var MongoClient = require('mongodb').MongoClient
  , assert = require('assert');

// Connection URL
var url = 'mongodb://localhost:27017/myproject';
// Use connect method to connect to the Server
MongoClient.connect(url, function(err, db) {
  assert.equal(null, err);
  console.log("Connected correctly to server");

  db.close();
});
```

|

||||

|

||||

Given that you booted up the **mongod** process earlier the application should connect successfully and print **Connected correctly to server** to the console.
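The connection URL packs the host, the port and the database name into one string. As an illustration only, the parts can be pulled out with the `URL` class from Node's standard `url` module. The driver does its own parsing of connection strings, so this sketch merely shows how the simple single-host URL used here is laid out.

```js
var url = require('url');

// Parse the same connection string used in app.js.
var parsed = new url.URL('mongodb://localhost:27017/myproject');

console.log(parsed.hostname);          // localhost
console.log(parsed.port);              // 27017
console.log(parsed.pathname.slice(1)); // myproject
```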

|

||||

|

||||

Let's add some code to show the different CRUD operations available.

|

||||

|

||||

Inserting a Document

|

||||

--------------------

|

||||

Let's create a function that will insert some documents for us.

|

||||

|

||||

```js
var insertDocuments = function(db, callback) {
  // Get the documents collection
  var collection = db.collection('documents');
  // Insert some documents
  collection.insertMany([
    {a : 1}, {a : 2}, {a : 3}
  ], function(err, result) {
    assert.equal(err, null);
    assert.equal(3, result.result.n);
    assert.equal(3, result.ops.length);
    console.log("Inserted 3 documents into the document collection");
    callback(result);
  });
}
```

|

||||

|

||||

The insert command will return a results object that contains several fields that might be useful.

|

||||

|

||||

* **result** Contains the result document from MongoDB

|

||||

* **ops** Contains the documents inserted with added **_id** fields

|

||||

* **connection** Contains the connection used to perform the insert

|

||||

|

||||

Let's add a call to the **insertDocuments** function in the **MongoClient.connect** method callback.

|

||||

|

||||

```js
var MongoClient = require('mongodb').MongoClient
  , assert = require('assert');

// Connection URL
var url = 'mongodb://localhost:27017/myproject';
// Use connect method to connect to the Server
MongoClient.connect(url, function(err, db) {
  assert.equal(null, err);
  console.log("Connected correctly to server");

  insertDocuments(db, function() {
    db.close();
  });
});
```

|

||||

|

||||

We can now run the updated **app.js** file.

|

||||

|

||||

```

|

||||

node app.js

|

||||

```

|

||||

|

||||

You should see the following output after running the **app.js** file.

|

||||

|

||||

```

|

||||

Connected correctly to server

|

||||

Inserted 3 documents into the document collection

|

||||

```

|

||||

|

||||

Updating a document

|

||||

-------------------

|

||||

Let's look at how to do a simple document update by adding a new field **b** to the document that has the field **a** set to **2**.

|

||||

|

||||

```js
var updateDocument = function(db, callback) {
  // Get the documents collection
  var collection = db.collection('documents');
  // Update document where a is 2, set b equal to 1
  collection.updateOne({ a : 2 }
    , { $set: { b : 1 } }, function(err, result) {
    assert.equal(err, null);
    assert.equal(1, result.result.n);
    console.log("Updated the document with the field a equal to 2");
    callback(result);
  });
}
```

|

||||

|

||||

The method will update the first document where the field **a** is equal to **2** by adding a new field **b** to the document set to **1**. Let's update the callback function from **MongoClient.connect** to include the update method.

|

||||

|

||||

```js
var MongoClient = require('mongodb').MongoClient
  , assert = require('assert');

// Connection URL
var url = 'mongodb://localhost:27017/myproject';
// Use connect method to connect to the Server
MongoClient.connect(url, function(err, db) {
  assert.equal(null, err);
  console.log("Connected correctly to server");

  insertDocuments(db, function() {
    updateDocument(db, function() {
      db.close();
    });
  });
});
```
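The effect of the `updateOne` call on the three inserted documents can be pictured with plain JavaScript. The sketch below does not use the driver: `updateFirst` is a hypothetical helper that only mimics the "first match gets the new field" behaviour on an in-memory array.

```js
// Plain-JavaScript picture of updateOne({ a: 2 }, { $set: { b: 1 } }).
// This only mimics the semantics on an array; it does not use the driver.
function updateFirst(docs, matches, fields) {
  for (var i = 0; i < docs.length; i++) {
    if (matches(docs[i])) {
      Object.assign(docs[i], fields);
      return 1; // number of documents modified
    }
  }
  return 0;
}

var docs = [{ a: 1 }, { a: 2 }, { a: 3 }];
var n = updateFirst(docs, function (d) { return d.a === 2; }, { b: 1 });

console.log(n);    // 1
console.log(docs); // [ { a: 1 }, { a: 2, b: 1 }, { a: 3 } ]
```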

|

||||

|

||||

Delete a document

|

||||

-----------------

|

||||

Next let's delete the document where the field **a** equals **3**.

|

||||

|

||||

```js
var deleteDocument = function(db, callback) {
  // Get the documents collection
  var collection = db.collection('documents');
  // Delete the document where a is 3
  collection.deleteOne({ a : 3 }, function(err, result) {
    assert.equal(err, null);
    assert.equal(1, result.result.n);
    console.log("Removed the document with the field a equal to 3");
    callback(result);
  });
}
```

|

||||

|

||||

This will delete the first document where the field **a** equals **3**. Let's add the method to the **MongoClient.connect** callback function.

|

||||

|

||||

```js
var MongoClient = require('mongodb').MongoClient
  , assert = require('assert');

// Connection URL
var url = 'mongodb://localhost:27017/myproject';
// Use connect method to connect to the Server
MongoClient.connect(url, function(err, db) {
  assert.equal(null, err);
  console.log("Connected correctly to server");

  insertDocuments(db, function() {
    updateDocument(db, function() {
      deleteDocument(db, function() {
        db.close();
      });
    });
  });
});
```

|

||||

|

||||

Finally let's retrieve all the documents using a simple find.

|

||||

|

||||

Find All Documents

|

||||

------------------

|

||||

We will finish up the Quickstart CRUD methods by performing a simple query that returns all the documents matching the query.

|

||||

|

||||

```js
var findDocuments = function(db, callback) {
  // Get the documents collection
  var collection = db.collection('documents');
  // Find some documents
  collection.find({}).toArray(function(err, docs) {
    assert.equal(err, null);
    assert.equal(2, docs.length);
    console.log("Found the following records");
    console.dir(docs);
    callback(docs);
  });
}
```

|

||||

|

||||

This query will return all the documents in the **documents** collection. Since we deleted a document the total

|

||||

documents returned is **2**. Finally let's add the **findDocuments** function to the **MongoClient.connect** callback.

|

||||

|

||||

```js
var MongoClient = require('mongodb').MongoClient
  , assert = require('assert');

// Connection URL
var url = 'mongodb://localhost:27017/myproject';
// Use connect method to connect to the Server
MongoClient.connect(url, function(err, db) {
  assert.equal(null, err);
  console.log("Connected correctly to server");

  insertDocuments(db, function() {
    updateDocument(db, function() {
      deleteDocument(db, function() {
        findDocuments(db, function() {
          db.close();
        });
      });
    });
  });
});
```
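The four levels of nesting above grow with every step added. One way to keep the code flat is a small helper that runs callback-style steps in order. The sketch below is an assumption for illustration, not something the driver provides: `runInOrder` and `makeStep` are hypothetical names, and the stub steps stand in for the real functions so the example runs without a server.

```js
// Runs callback-style steps one after another, then calls `done`.
// Each step has the signature step(db, callback), like the functions above.
function runInOrder(db, steps, done) {
  var i = 0;
  function next() {
    if (i === steps.length) return done();
    steps[i++](db, next);
  }
  next();
}

// Stub steps so the sketch runs without a server.
var log = [];
function makeStep(name) {
  return function (db, callback) {
    log.push(name);
    callback();
  };
}

runInOrder({}, [makeStep('insert'), makeStep('update'),
                makeStep('delete'), makeStep('find')], function () {
  console.log(log.join(' -> ')); // insert -> update -> delete -> find
});
```

With the real functions, the body of the **MongoClient.connect** callback would become `runInOrder(db, [insertDocuments, updateDocument, deleteDocument, findDocuments], function() { db.close(); });`.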

|

||||

|

||||

This concludes the QuickStart of connecting and performing some basic operations using the MongoDB Node.js driver. For more detailed information you can look at the tutorials covering more specific topics of interest.

|

||||

|

||||

## Next Steps

|

||||

|

||||

* [MongoDB Documentation](http://mongodb.org/)

|

||||

* [Read about Schemas](http://learnmongodbthehardway.com/)

|

||||

* [Star us on GitHub](https://github.com/mongodb/node-mongodb-native)

|

||||

71

node_modules/mongodb/conf.json

generated

vendored

Normal file

@@ -0,0 +1,71 @@

|

||||

{

|

||||

"plugins": ["plugins/markdown", "docs/lib/jsdoc/examples_plugin.js"],

|

||||

"source": {

|

||||

"include": [

|

||||

"test/functional/operation_example_tests.js",

|

||||

"test/functional/operation_promises_example_tests.js",

|

||||

"test/functional/operation_generators_example_tests.js",

|

||||

"test/functional/authentication_tests.js",

|

||||

"test/functional/gridfs_stream_tests.js",

|

||||

"lib/admin.js",

|

||||

"lib/collection.js",

|

||||

"lib/cursor.js",

|

||||

"lib/aggregation_cursor.js",

|

||||

"lib/command_cursor.js",

|

||||

"lib/db.js",

|

||||

"lib/mongo_client.js",

|

||||

"lib/mongos.js",

|

||||

"lib/read_preference.js",

|

||||

"lib/replset.js",

|

||||

"lib/server.js",

|

||||

"lib/bulk/common.js",

|

||||

"lib/bulk/ordered.js",

|

||||

"lib/bulk/unordered.js",

|

||||

"lib/gridfs/grid_store.js",

|

||||

"node_modules/mongodb-core/lib/error.js",

|

||||

"lib/gridfs-stream/index.js",

|

||||

"node_modules/mongodb-core/lib/connection/logger.js",

|

||||

"node_modules/bson/lib/bson/binary.js",

|

||||

"node_modules/bson/lib/bson/code.js",

|

||||

"node_modules/bson/lib/bson/db_ref.js",

|

||||

"node_modules/bson/lib/bson/double.js",

|

||||

"node_modules/bson/lib/bson/long.js",

|

||||

"node_modules/bson/lib/bson/objectid.js",

|

||||

"node_modules/bson/lib/bson/symbol.js",

|

||||

"node_modules/bson/lib/bson/timestamp.js",

|

||||

"node_modules/bson/lib/bson/max_key.js",

|

||||

"node_modules/bson/lib/bson/min_key.js"

|

||||

]

|

||||

},

|

||||

"templates": {

|

||||

"cleverLinks": true,

|

||||

"monospaceLinks": true,

|

||||

"default": {

|

||||

"outputSourceFiles" : true

|

||||

},

|

||||

"applicationName": "Node.js MongoDB Driver API",

|

||||

"disqus": true,

|

||||

"googleAnalytics": "UA-29229787-1",

|

||||

"openGraph": {

|

||||

"title": "",

|

||||

"type": "website",

|

||||

"image": "",

|

||||

"site_name": "",

|

||||

"url": ""

|

||||

},

|

||||

"meta": {

|

||||

"title": "",

|

||||

"description": "",

|

||||

"keyword": ""

|

||||

},

|

||||

"linenums": true

|

||||

},

|

||||

"markdown": {

|

||||

"parser": "gfm",

|

||||

"hardwrap": true,

|

||||

"tags": ["examples"]

|

||||

},

|

||||

"examples": {

|

||||

"indent": 4

|

||||

}

|

||||

}

|

||||

50

node_modules/mongodb/index.js

generated

vendored

Normal file


@@ -0,0 +1,50 @@

|

||||

// Core module

|

||||

var core = require('mongodb-core'),

|

||||

Instrumentation = require('./lib/apm');

|

||||

|

||||

// Set up the connect function

|

||||

var connect = require('./lib/mongo_client').connect;

|

||||

|

||||

// Expose error class

|

||||

connect.MongoError = core.MongoError;

|

||||

|

||||

// Actual driver classes exported

|

||||

connect.Admin = require('./lib/admin');

|

||||

connect.MongoClient = require('./lib/mongo_client');

|

||||

connect.Db = require('./lib/db');

|

||||

connect.Collection = require('./lib/collection');

|

||||

connect.Server = require('./lib/server');

|

||||

connect.ReplSet = require('./lib/replset');

|

||||

connect.Mongos = require('./lib/mongos');

|

||||

connect.ReadPreference = require('./lib/read_preference');

|

||||

connect.GridStore = require('./lib/gridfs/grid_store');

|

||||

connect.Chunk = require('./lib/gridfs/chunk');

|

||||

connect.Logger = core.Logger;

|

||||

connect.Cursor = require('./lib/cursor');

|

||||

connect.GridFSBucket = require('./lib/gridfs-stream');

|

||||

|

||||

// BSON types exported

|

||||

connect.Binary = core.BSON.Binary;

|

||||

connect.Code = core.BSON.Code;

|

||||

connect.Map = core.BSON.Map;

|

||||

connect.DBRef = core.BSON.DBRef;

|

||||

connect.Double = core.BSON.Double;

|

||||

connect.Long = core.BSON.Long;

|

||||

connect.MinKey = core.BSON.MinKey;

|

||||

connect.MaxKey = core.BSON.MaxKey;

|

||||

connect.ObjectID = core.BSON.ObjectID;

|

||||

connect.ObjectId = core.BSON.ObjectID;

|

||||

connect.Symbol = core.BSON.Symbol;

|

||||

connect.Timestamp = core.BSON.Timestamp;

|

||||

|

||||

// Add connect method

|

||||

connect.connect = connect;

|

||||

|

||||

// Set up the instrumentation method

|

||||

connect.instrument = function(options, callback) {

|

||||

if(typeof options == 'function') callback = options, options = {};

|

||||

return new Instrumentation(core, options, callback);

|

||||

}

|

||||

|

||||

// Set our exports to be the connect function

|

||||

module.exports = connect;

|

||||

581

node_modules/mongodb/lib/admin.js

generated

vendored

Normal file


@@ -0,0 +1,581 @@

|

||||

"use strict";

|

||||

|

||||

var toError = require('./utils').toError,

|

||||

Define = require('./metadata'),

|

||||

shallowClone = require('./utils').shallowClone;

|

||||

|

||||

/**

|

||||

* @fileOverview The **Admin** class is an internal class that allows convenient access to

|

||||

* the admin functionality and commands for MongoDB.

|

||||

*

|

||||

* **Admin cannot be instantiated directly**

|

||||

* @example

|

||||

* var MongoClient = require('mongodb').MongoClient,

|

||||

* test = require('assert');

|

||||

* // Connection url

|

||||

* var url = 'mongodb://localhost:27017/test';

|

||||

* // Connect using MongoClient

|

||||

* MongoClient.connect(url, function(err, db) {

|

||||

* // Use the admin database for the operation

|

||||

* var adminDb = db.admin();

|

||||

*

|

||||

* // List all the available databases

|

||||

* adminDb.listDatabases(function(err, dbs) {

|

||||

* test.equal(null, err);

|

||||

* test.ok(dbs.databases.length > 0);

|

||||

* db.close();

|

||||

* });

|

||||

* });

|

||||

*/

|

||||

|

||||

/**

|

||||

* Create a new Admin instance (INTERNAL TYPE, do not instantiate directly)

|

||||

* @class

|

||||

* @return {Admin} an Admin instance.

|

||||

*/

|

||||

var Admin = function(db, topology, promiseLibrary) {

|

||||

if(!(this instanceof Admin)) return new Admin(db, topology, promiseLibrary);

|

||||

var self = this;

|

||||

|

||||

// Internal state

|

||||

this.s = {

|

||||

db: db

|

||||

, topology: topology

|

||||

, promiseLibrary: promiseLibrary

|

||||

}

|

||||

}

|

||||

|

||||

var define = Admin.define = new Define('Admin', Admin, false);

|

||||

|

||||

/**

|

||||

* The callback format for results

|

||||

* @callback Admin~resultCallback

|

||||

* @param {MongoError} error An error instance representing the error during the execution.

|

||||

* @param {object} result The result object if the command was executed successfully.

|

||||

*/

|

||||

|

||||

/**

|

||||

* Execute a command

|

||||

* @method

|

||||

* @param {object} command The command hash

|

||||

* @param {object} [options=null] Optional settings.

|

||||

* @param {(ReadPreference|string)} [options.readPreference=null] The preferred read preference (ReadPreference.PRIMARY, ReadPreference.PRIMARY_PREFERRED, ReadPreference.SECONDARY, ReadPreference.SECONDARY_PREFERRED, ReadPreference.NEAREST).

|

||||

* @param {number} [options.maxTimeMS=null] Number of milliseconds to wait before aborting the query.

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.command = function(command, options, callback) {

|

||||

var self = this;

|

||||

var args = Array.prototype.slice.call(arguments, 1);

|

||||

callback = args.pop();

|

||||

if(typeof callback != 'function') args.push(callback);

|

||||

options = args.length ? args.shift() : {};

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.s.db.executeDbAdminCommand(command, options, function(err, doc) {

|

||||

return callback != null ? callback(err, doc) : null;

|

||||

});

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.executeDbAdminCommand(command, options, function(err, doc) {

|

||||

if(err) return reject(err);

|

||||

resolve(doc);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('command', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Retrieve the server information for the current

|

||||

* instance of the db client

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.buildInfo = function(callback) {

|

||||

var self = this;

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.serverInfo(callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.serverInfo(function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('buildInfo', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Retrieve the server information for the current

|

||||

* instance of the db client

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.serverInfo = function(callback) {

|

||||

var self = this;

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.s.db.executeDbAdminCommand({buildinfo:1}, function(err, doc) {

|

||||

if(err != null) return callback(err, null);

|

||||

callback(null, doc);

|

||||

});

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.executeDbAdminCommand({buildinfo:1}, function(err, doc) {

|

||||

if(err) return reject(err);

|

||||

resolve(doc);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('serverInfo', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Retrieve this db's server status.

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.serverStatus = function(callback) {

|

||||

var self = this;

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return serverStatus(self, callback)

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

serverStatus(self, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

};

|

||||

|

||||

var serverStatus = function(self, callback) {

|

||||

self.s.db.executeDbAdminCommand({serverStatus: 1}, function(err, doc) {

|

||||

if(err == null && doc.ok === 1) {

|

||||

callback(null, doc);

|

||||

} else {

|

||||

if(err) return callback(err, false);

|

||||

return callback(toError(doc), false);

|

||||

}

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('serverStatus', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Retrieve the current profiling Level for MongoDB

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.profilingLevel = function(callback) {

|

||||

var self = this;

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return profilingLevel(self, callback)

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

profilingLevel(self, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

};

|

||||

|

||||

var profilingLevel = function(self, callback) {

|

||||

self.s.db.executeDbAdminCommand({profile:-1}, function(err, doc) {

|

||||

doc = doc;

|

||||

|

||||

if(err == null && doc.ok === 1) {

|

||||

var was = doc.was;

|

||||

if(was == 0) return callback(null, "off");

|

||||

if(was == 1) return callback(null, "slow_only");

|

||||

if(was == 2) return callback(null, "all");

|

||||

return callback(new Error("Error: illegal profiling level value " + was), null);

|

||||

} else {

|

||||

err != null ? callback(err, null) : callback(new Error("Error with profile command"), null);

|

||||

}

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('profilingLevel', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Ping the MongoDB server and retrieve results

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.ping = function(options, callback) {

|

||||

var self = this;

|

||||

var args = Array.prototype.slice.call(arguments, 0);

|

||||

callback = args.pop();

|

||||

if(typeof callback != 'function') args.push(callback);

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.s.db.executeDbAdminCommand({ping: 1}, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.executeDbAdminCommand({ping: 1}, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('ping', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Authenticate a user against the server.

|

||||

* @method

|

||||

* @param {string} username The username.

|

||||

* @param {string} [password] The password.

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.authenticate = function(username, password, options, callback) {

|

||||

var self = this;

|

||||

if(typeof options == 'function') callback = options, options = {};

|

||||

options = shallowClone(options);

|

||||

options.authdb = 'admin';

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.s.db.authenticate(username, password, options, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.authenticate(username, password, options, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('authenticate', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Logout user from server, fire off on all connections and remove all auth info

|

||||

* @method

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.logout = function(callback) {

|

||||

var self = this;

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return this.s.db.logout({authdb: 'admin'}, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.logout({authdb: 'admin'}, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('logout', {callback: true, promise:true});

|

||||

|

||||

// Get write concern

|

||||

var writeConcern = function(options, db) {

|

||||

options = shallowClone(options);

|

||||

|

||||

// If options already contain write concerns return it

|

||||

if(options.w || options.wtimeout || options.j || options.fsync) {

|

||||

return options;

|

||||

}

|

||||

|

||||

// Set db write concern if available

|

||||

if(db.writeConcern) {

|

||||

if(db.writeConcern.w != null) options.w = db.writeConcern.w;

|

||||

if(db.writeConcern.wtimeout != null) options.wtimeout = db.writeConcern.wtimeout;

|

||||

if(db.writeConcern.j != null) options.j = db.writeConcern.j;

|

||||

if(db.writeConcern.fsync != null) options.fsync = db.writeConcern.fsync;

|

||||

}

|

||||

|

||||

// Return modified options

|

||||

return options;

|

||||

}

|

||||

|

||||

/**

|

||||

* Add a user to the database.

|

||||

* @method

|

||||

* @param {string} username The username.

|

||||

* @param {string} password The password.

|

||||

* @param {object} [options=null] Optional settings.

|

||||

* @param {(number|string)} [options.w=null] The write concern.

|

||||

* @param {number} [options.wtimeout=null] The write concern timeout.

|

||||

* @param {boolean} [options.j=false] Specify a journal write concern.

|

||||

* @param {boolean} [options.fsync=false] Specify a file sync write concern.

|

||||

* @param {object} [options.customData=null] Custom data associated with the user (only Mongodb 2.6 or higher)

|

||||

* @param {object[]} [options.roles=null] Roles associated with the created user (only Mongodb 2.6 or higher)

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.addUser = function(username, password, options, callback) {

|

||||

var self = this;

|

||||

var args = Array.prototype.slice.call(arguments, 2);

|

||||

callback = args.pop();

|

||||

if(typeof callback != 'function') args.push(callback);

|

||||

options = args.length ? args.shift() : {};

|

||||

options = options || {};

|

||||

// Get the options

|

||||

options = writeConcern(options, self.s.db)

|

||||

// Set the db name to admin

|

||||

options.dbName = 'admin';

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function')

|

||||

return self.s.db.addUser(username, password, options, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.addUser(username, password, options, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('addUser', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Remove a user from a database

|

||||

* @method

|

||||

* @param {string} username The username.

|

||||

* @param {object} [options=null] Optional settings.

|

||||

* @param {(number|string)} [options.w=null] The write concern.

|

||||

* @param {number} [options.wtimeout=null] The write concern timeout.

|

||||

* @param {boolean} [options.j=false] Specify a journal write concern.

|

||||

* @param {boolean} [options.fsync=false] Specify a file sync write concern.

|

||||

* @param {Admin~resultCallback} [callback] The command result callback

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.removeUser = function(username, options, callback) {

|

||||

var self = this;

|

||||

var args = Array.prototype.slice.call(arguments, 1);

|

||||

callback = args.pop();

|

||||

if(typeof callback != 'function') args.push(callback);

|

||||

options = args.length ? args.shift() : {};

|

||||

options = options || {};

|

||||

// Get the options

|

||||

options = writeConcern(options, self.s.db)

|

||||

// Set the db name

|

||||

options.dbName = 'admin';

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function')

|

||||

return self.s.db.removeUser(username, options, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.removeUser(username, options, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('removeUser', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Set the current profiling level of MongoDB

|

||||

*

|

||||

* @param {string} level The new profiling level (off, slow_only, all).

|

||||

* @param {Admin~resultCallback} [callback] The command result callback.

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.setProfilingLevel = function(level, callback) {

|

||||

var self = this;

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return setProfilingLevel(self, level, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

setProfilingLevel(self, level, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

};

|

||||

|

||||

var setProfilingLevel = function(self, level, callback) {

|

||||

var command = {};

|

||||

var profile = 0;

|

||||

|

||||

if(level == "off") {

|

||||

profile = 0;

|

||||

} else if(level == "slow_only") {

|

||||

profile = 1;

|

||||

} else if(level == "all") {

|

||||

profile = 2;

|

||||

} else {

|

||||

return callback(new Error("Error: illegal profiling level value " + level));

|

||||

}

|

||||

|

||||

// Set up the profile number

|

||||

command['profile'] = profile;

|

||||

|

||||

self.s.db.executeDbAdminCommand(command, function(err, doc) {

|

||||

doc = doc;

|

||||

|

||||

if(err == null && doc.ok === 1)

|

||||

return callback(null, level);

|

||||

return err != null ? callback(err, null) : callback(new Error("Error with profile command"), null);

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('setProfilingLevel', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Retrieve the current profiling information for MongoDB

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback.

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.profilingInfo = function(callback) {

|

||||

var self = this;

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return profilingInfo(self, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

profilingInfo(self, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

};

|

||||

|

||||

var profilingInfo = function(self, callback) {

|

||||

try {

|

||||

self.s.topology.cursor("admin.system.profile", { find: 'system.profile', query: {}}, {}).toArray(callback);

|

||||

} catch (err) {

|

||||

return callback(err, null);

|

||||

}

|

||||

}

|

||||

|

||||

define.classMethod('profilingInfo', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Validate an existing collection

|

||||

*

|

||||

* @param {string} collectionName The name of the collection to validate.

|

||||

* @param {object} [options=null] Optional settings.

|

||||

* @param {Admin~resultCallback} [callback] The command result callback.

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.validateCollection = function(collectionName, options, callback) {

|

||||

var self = this;

|

||||

var args = Array.prototype.slice.call(arguments, 1);

|

||||

callback = args.pop();

|

||||

if(typeof callback != 'function') args.push(callback);

|

||||

options = args.length ? args.shift() : {};

|

||||

options = options || {};

|

||||

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function')

|

||||

return validateCollection(self, collectionName, options, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

validateCollection(self, collectionName, options, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

};

|

||||

|

||||

var validateCollection = function(self, collectionName, options, callback) {

|

||||

var command = {validate: collectionName};

|

||||

var keys = Object.keys(options);

|

||||

|

||||

// Decorate command with extra options

|

||||

for(var i = 0; i < keys.length; i++) {

|

||||

if(options.hasOwnProperty(keys[i])) {

|

||||

command[keys[i]] = options[keys[i]];

|

||||

}

|

||||

}

|

||||

|

||||

self.s.db.command(command, function(err, doc) {

|

||||

if(err != null) return callback(err, null);

|

||||

|

||||

if(doc.ok === 0)

|

||||

return callback(new Error("Error with validate command"), null);

|

||||

if(doc.result != null && doc.result.constructor != String)

|

||||

return callback(new Error("Error with validation data"), null);

|

||||

if(doc.result != null && doc.result.match(/exception|corrupt/) != null)

|

||||

return callback(new Error("Error: invalid collection " + collectionName), null);

|

||||

if(doc.valid != null && !doc.valid)

|

||||

return callback(new Error("Error: invalid collection " + collectionName), null);

|

||||

|

||||

return callback(null, doc);

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('validateCollection', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* List the available databases

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback.

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.listDatabases = function(callback) {

|

||||

var self = this;

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return self.s.db.executeDbAdminCommand({listDatabases:1}, {}, callback);

|

||||

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

self.s.db.executeDbAdminCommand({listDatabases:1}, {}, function(err, r) {

|

||||

if(err) return reject(err);

|

||||

resolve(r);

|

||||

});

|

||||

});

|

||||

}

|

||||

|

||||

define.classMethod('listDatabases', {callback: true, promise:true});

|

||||

|

||||

/**

|

||||

* Get ReplicaSet status

|

||||

*

|

||||

* @param {Admin~resultCallback} [callback] The command result callback.

|

||||

* @return {Promise} returns Promise if no callback passed

|

||||

*/

|

||||

Admin.prototype.replSetGetStatus = function(callback) {

|

||||

var self = this;

|

||||

// Execute using callback

|

||||

if(typeof callback == 'function') return replSetGetStatus(self, callback);

|

||||

// Return a Promise

|

||||

return new this.s.promiseLibrary(function(resolve, reject) {

|

||||

replSetGetStatus(self, function(err, r) {

|